July 31, 2025

Fixing Flawed Glare Predictions in Lighting Design

A proposed new model that aligns glare simulations with human perception, no massive data files required

For decades, lighting designers have relied on simulation tools that predict glare from simplified photometric data, but real-world results often tell a different story. A new study by researchers at Poznań University of Technology in Poland, published in LEUKOS, proposes a solution that could align simulation with reality without burdening design workflows with terabytes of data.

Glare metrics like the Unified Glare Rating (UGR) depend on luminance: how bright a luminaire appears from a specific vantage point. But most simulation tools, like Dialux and Relux, calculate luminance from IES or LDT files using only luminous intensity and projected surface area. This assumes a uniform glow from the entire luminaire surface — an assumption that’s increasingly flawed in the era of micro-prismatic lenses and asymmetric optics.
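To make the uniform-glow assumption concrete, here is a minimal Python sketch of the standard CIE UGR formula, fed with an average luminance derived from luminous intensity and projected area. The function names and all input values are illustrative assumptions, not taken from the study or from any simulation tool, and the Guth position index is supplied by hand rather than computed.

```python
import math


def average_luminance(intensity_cd, projected_area_m2):
    """Average luminance in cd/m^2, assuming the whole surface glows uniformly."""
    if projected_area_m2 <= 0:
        raise ValueError("projected area must be positive")
    return intensity_cd / projected_area_m2


def ugr(background_luminance, sources):
    """CIE Unified Glare Rating.

    sources: iterable of (luminance_cd_m2, solid_angle_sr, guth_position_index),
    one tuple per luminaire as seen by the observer.
    """
    total = sum(lum ** 2 * omega / p ** 2 for lum, omega, p in sources)
    return 8 * math.log10(0.25 / background_luminance * total)


# Hypothetical luminaire: 1000 cd toward the observer, 0.1 m^2 projected area.
uniform_lum = average_luminance(1000, 0.1)           # about 10,000 cd/m^2
ugr_uniform = ugr(25, [(uniform_lum, 0.01, 1.0)])

# Same intensity, but emitted from only a quarter of the surface:
# luminance is four times higher and the solid angle four times smaller.
ugr_concentrated = ugr(25, [(4 * uniform_lum, 0.01 / 4, 1.0)])
```

Because luminance enters the formula squared while solid angle enters linearly, concentrating the same intensity into a quarter of the area raises the result by 8·log10(4), roughly 4.8 UGR units. That is the kind of gap an averaged luminance value cannot capture.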

Add to this the limitations of imaging luminance measurement devices (ILMDs): depending on the camera sensor, focal length, and distance from the luminaire, different systems can report drastically different luminance values. That discrepancy directly affects UGR calculations, leading to mismatched predictions between the digital model and physical space.

A Smarter, Lighter Model for Real-World Glare

The Polish research team tackled this mismatch by creating a new digital model that integrates real luminance data — captured by ILMDs — and compresses it into an extended IES file format that remains compatible with standard design software. Instead of storing full HDR images (which can exceed 1 TB), the model saves luminance descriptors: average, max, and min values within defined contours where luminance exceeds 1%, 10%, or 50% of the peak.
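The descriptor idea can be sketched in a few lines of Python. This is an illustration only: it assumes the luminance image is a plain grid of cd/m² values and treats each "contour" as the set of pixels above a fraction of the peak. The function name, the dictionary layout, and the sample values are hypothetical, and the study's actual contour definition and file encoding may differ.

```python
def luminance_descriptors(luminance_map, fractions=(0.01, 0.10, 0.50)):
    """Summarise a luminance image by statistics inside threshold regions.

    luminance_map: list of rows, each a list of luminance values in cd/m^2.
    Returns {fraction: {"mean", "max", "min", "pixel_count"}}.
    """
    pixels = [value for row in luminance_map for value in row]
    peak = max(pixels)
    if peak <= 0:
        raise ValueError("luminance map contains no light-emitting pixels")

    descriptors = {}
    for fraction in fractions:
        # Keep only pixels brighter than this fraction of the peak.
        inside = [value for value in pixels if value > fraction * peak]
        descriptors[fraction] = {
            "mean": sum(inside) / len(inside),
            "max": max(inside),
            "min": min(inside),
            "pixel_count": len(inside),
        }
    return descriptors


# Tiny made-up 2 x 3 luminance image (cd/m^2).
sample = [
    [0, 50, 100],
    [1000, 600, 5],
]
summary = luminance_descriptors(sample)
```

A handful of numbers per threshold replaces the full image, which is what keeps the extended file small.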

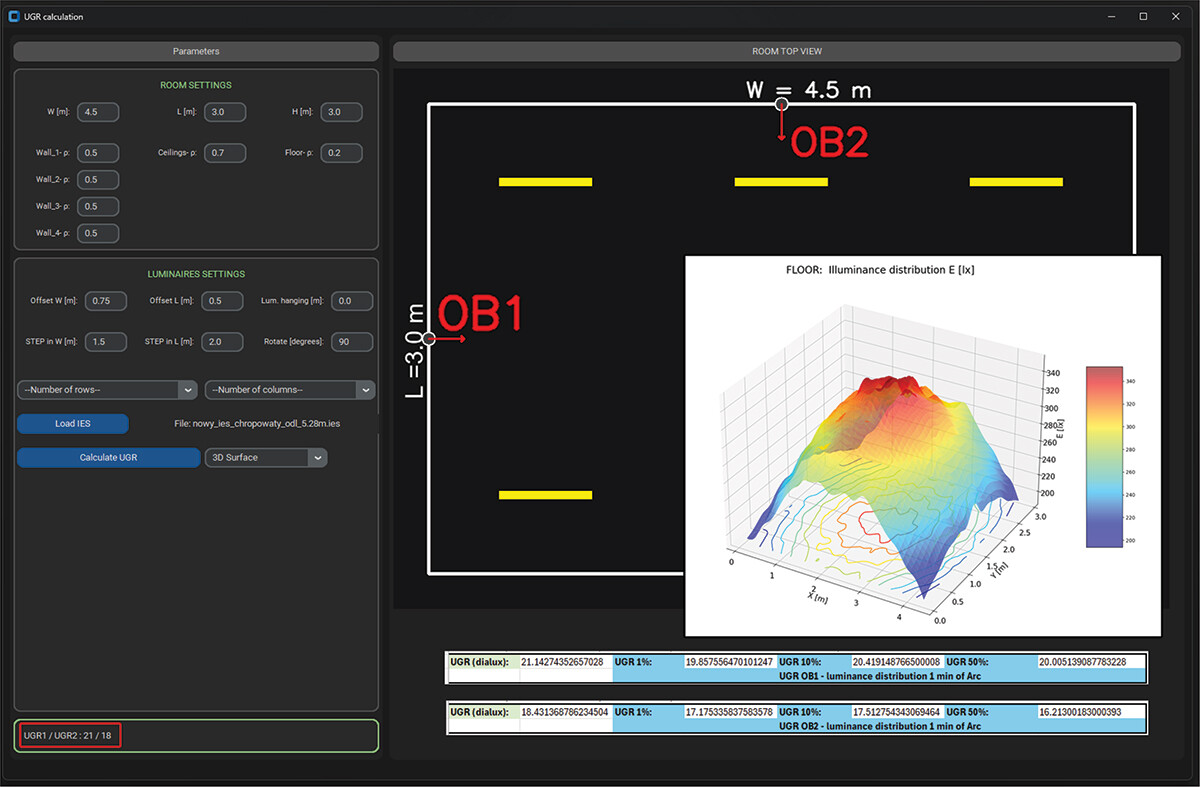

Above: Appearance of the original UGR calculation application developed for the project (OB1 - UGR1 observer, OB2 - OB2 observer). Image credit: LEUKOS

Crucially, they also introduced a distance-aware correction factor. Each camera pixel covers a larger patch of the luminaire as distance grows, so small bright spots are averaged with their darker surroundings and the measured peak luminance drops. Their system compensates by anchoring glare calculations to a field of view of 1 arcminute, roughly the resolving limit of the human eye. This enables accurate, repeatable UGR values whether measured or simulated, across varying luminaire types and observation distances.
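The geometry behind a fixed angular reference can be sketched as follows. This is not the study's correction factor, which the article does not spell out. It only shows, under simple assumptions, how large a patch a 1-arcminute field covers at a given distance and how averaging over a larger patch lowers a measured peak. All function names and sample values are hypothetical.

```python
import math

ARCMIN_RAD = math.radians(1 / 60)  # one arcminute in radians


def pixel_angle_rad(pixel_pitch_m, focal_length_m):
    """Angular field of view of a single sensor pixel (simple pinhole model)."""
    return 2 * math.atan(pixel_pitch_m / (2 * focal_length_m))


def footprint_m(angle_rad, distance_m):
    """Width of the patch on the luminaire covered by a given field angle."""
    return 2 * distance_m * math.tan(angle_rad / 2)


def block_average(luminance_map, k):
    """Average k x k blocks of pixels, mimicking a coarser field of view."""
    n_rows = len(luminance_map) // k
    n_cols = len(luminance_map[0]) // k
    averaged = []
    for r in range(n_rows):
        row = []
        for c in range(n_cols):
            block = [luminance_map[r * k + i][c * k + j]
                     for i in range(k) for j in range(k)]
            row.append(sum(block) / (k * k))
        averaged.append(row)
    return averaged


# A 1-arcminute field covers about 0.29 mm at 1 m and about 1.45 mm at 5 m.
patch_at_1m = footprint_m(ARCMIN_RAD, 1.0)
patch_at_5m = footprint_m(ARCMIN_RAD, 5.0)

# One bright pixel among dark neighbours: averaging a 2 x 2 block
# cuts the apparent peak to a quarter of its original value.
coarse = block_average([[1000, 0], [0, 0]], 2)
```

Resampling every measurement to the same angular footprint is one way to make peaks from different cameras and distances comparable, which is the role the 1-arcminute anchor plays in the article's description.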

A European Focus, Possible North American Implications

To validate their model, the authors used Dialux and Dialux Evo—lighting simulation platforms widely adopted across Europe and integrated into EN-standard workflows. However, the study did not test the new luminance model within AGi32 or Visual, two dominant tools in the North American market.

That omission might matter. While the proposed enhancements are backward-compatible with standard IES file structures, AGi32 and Visual rely on their own glare calculation engines. Whether those platforms can interpret the extended luminance descriptors — like mean and max luminance within adaptive angular contours — is currently unverified.

For now, the model remains a proof of concept. The broader challenge will be industry adoption, especially convincing North American software developers to embrace a more precise, human-centered definition of glare.